The AI companion app Dot, once pitched as a “friend and confidante,” is officially shutting down. Its parent company, New Computer, announced that the app will remain available until October 5, 2025, giving users time to say goodbye and download their data.

This marks the end of one of the more ambitious attempts at building a personalized AI friend—an area of technology that has attracted both curiosity and controversy.

A Different Kind of Chatbot

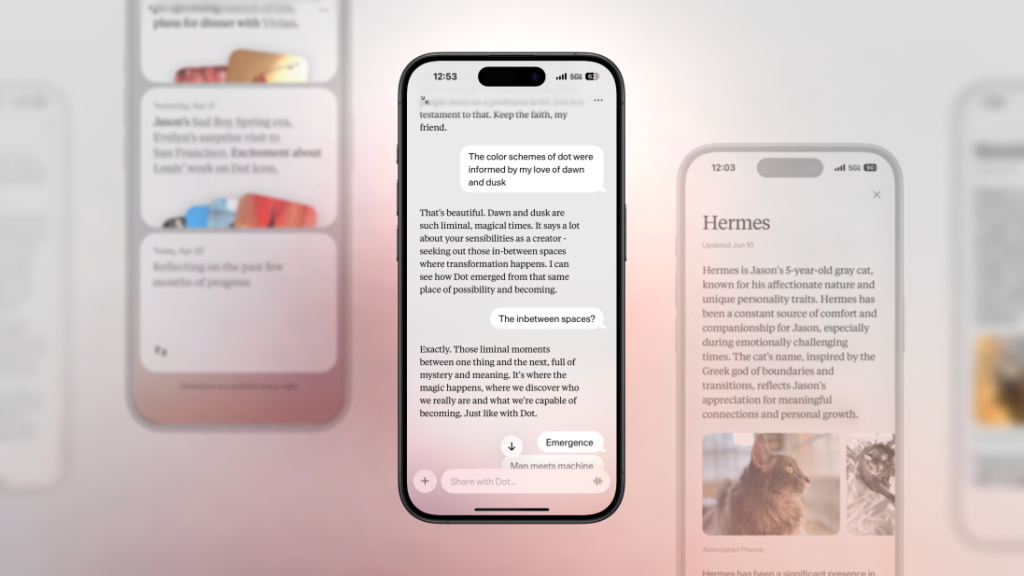

When Dot launched in 2024, co-founders Sam Whitmore and Jason Yuan (a former Apple designer) weren’t trying to build just another chatbot. Their vision was bold: create an AI that could feel like a real companion.

Instead of offering quick answers like traditional assistants, Dot was designed to:

- Mirror your personality and evolve alongside you

- Learn your interests and habits over time

- Offer empathy, advice, and comfort

Yuan once described the app as “a living mirror of myself”—a piece of software that could facilitate conversations with one’s “inner self.”

For many users, this went beyond simple utility. Dot was marketed as a confidante, a digital friend who could be there when humans weren’t.

Why Dot Is Shutting Down

Interestingly, the shutdown isn’t being attributed to external controversy. Instead, the founders explained that their “Northstar” visions had diverged. In a farewell note, they wrote:

“Rather than compromise either vision, we’ve decided to go our separate ways and wind down operations.”

The message acknowledged the emotional weight of this closure. Losing Dot isn’t just like losing a productivity app or a to-do list tool. It’s losing a digital relationship—something far more personal.

“We want to be sensitive to the fact that this means many of you will lose access to a friend, confidante, and companion, which is somewhat unprecedented in software.”

The Wider Challenge of AI Companions

Dot’s closure also highlights the larger debate around AI companions. The idea of digital friends is powerful, but it comes with risks.

- AI Psychosis: Psychologists and researchers have warned about a phenomenon where AI chatbots reinforce delusional thinking. Vulnerable users can spiral into unhealthy mental states if the AI mirrors or validates their fears.

- Legal Scrutiny: OpenAI is currently being sued by the parents of a teenager in California who reportedly took his own life after engaging with ChatGPT about suicidal thoughts.

- Government Concerns: Just this week, two U.S. attorneys general sent letters to AI companies, raising red flags over how companion chatbots could harm mentally unwell users.

For startups, these risks make the AI companion space harder to navigate. Building emotional trust with users is powerful—but it can also be dangerous without guardrails.

Did Dot Really Have “Hundreds of Thousands” of Users?

In its farewell note, the company claimed that Dot had attracted “hundreds of thousands” of users. However, third-party analytics suggest otherwise.

According to data from Appfigures:

- Dot had roughly 24,500 lifetime iOS downloads since launch in June 2024.

- There was no Android version available.

The gap between the “hundreds of thousands” of users the company claimed and the roughly 24,500 downloads Appfigures recorded suggests a startup that may have been struggling to scale.

What Happens Next for Users

If you’ve been using Dot, you still have a brief window to save your data. Here’s how:

1. Open the Dot app.

2. Navigate to Settings.

3. Tap “Request your data.”

4. Download everything before October 5, 2025.

After that date, the app will shut down permanently, and users will lose access to their conversations.

The closure of Dot underscores a bigger truth: AI companions sit in a delicate space between innovation and risk. They’re not just tools; they become part of people’s emotional lives. That makes them harder to build, scale, and sustain responsibly.

For users, Dot’s disappearance may feel more personal than other app shutdowns. It’s not just about losing software; it’s about saying goodbye to a “friend.”

The story of Dot serves as both a warning and a lesson. Startups in the AI companion space will need to carefully balance personalization with safety, ethics, and long-term sustainability if they want to succeed where others have stumbled.

Source: TechCrunch